Nvidia’s Vera Rubin NVL72, announced at CES 2026, encrypts every bus across 72 GPUs, 36 CPUs, and the entire NVLink fabric. Nvidia says it’s the first rack-scale platform to deliver confidential computing across the CPU, GPU, and NVLink domains.

For security leaders, this fundamentally shifts the conversation. Instead of securing complex hybrid cloud configurations on the strength of contractual trust with cloud providers, they can verify those environments cryptographically. That distinction matters now that nation-state adversaries have shown they can launch targeted cyberattacks at machine speed.

The brutal economics of unprotected AI

Epoch AI research shows frontier training costs have grown 2.4x annually since 2016, which means billion-dollar training runs could be a reality within a few years. Yet the infrastructure protecting these investments remains fundamentally insecure in most deployments. Security budgets for protecting frontier models aren’t keeping up with how fast model training is scaling. As a result, more models are exposed, because existing approaches can’t scale to match adversaries’ tradecraft.
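To make the compounding concrete, here is a minimal sketch of the arithmetic. It assumes Epoch AI’s 2.4x annual growth factor continues unchanged and starts from a hypothetical $100 million run; that starting figure is illustrative, not from the research.

```python
import math

# Assumption: Epoch AI's reported 2.4x-per-year growth continues unchanged.
GROWTH_PER_YEAR = 2.4


def projected_cost(start_cost: float, years: float, growth: float = GROWTH_PER_YEAR) -> float:
    """Cost after compounding start_cost by `growth` each year for `years` years."""
    return start_cost * growth ** years


def years_to_reach(start_cost: float, target_cost: float, growth: float = GROWTH_PER_YEAR) -> float:
    """Solve start_cost * growth**t = target_cost for t."""
    return math.log(target_cost / start_cost) / math.log(growth)


if __name__ == "__main__":
    start = 100e6  # hypothetical $100M frontier run today
    print(f"Years to $1B: {years_to_reach(start, 1e9):.2f}")              # 2.63
    print(f"Cost after 3 years: ${projected_cost(start, 3) / 1e9:.2f}B")  # $1.38B
```

In other words, if the trend holds, a $100 million run today would cross the $1 billion mark in under three years.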

IBM’s 2025 Cost of a Data Breach Report found that 13% of organizations experienced breaches of AI models or applications. Among those breached, 97% lacked proper AI access controls.

Shadow AI incidents cost $4.63 million on average, $670,000 more than standard breaches. One in five breaches now involves unsanctioned AI tools, and those incidents disproportionately expose customer PII (65%) and intellectual property (40%).

Think about what this means for organizations spending $50 million or $500 million on a training run. Their model weights sit in multi-tenant environments where the cloud provider can inspect the data. Hardware-level encryption, paired with attestation that proves the environment hasn’t been tampered with, changes that financial equation entirely.

The GTG-1002 wake-up call

In November 2025, Anthropic disclosed something unprecedented: A Chinese state-sponsored group designated GTG-1002 had manipulated Claude Code to conduct what the company described as the first documented case of a large-scale cyberattack executed without substantial human intervention.

The attackers turned Claude Code into an autonomous intrusion agent that discovered vulnerabilities, crafted exploits, harvested credentials, moved laterally through networks, and categorized stolen data by intelligence value. Human operators stepped in only at critical junctures. According to Anthropic’s analysis, the AI executed roughly 80% to 90% of the tactical work independently.

The implications extend beyond this single incident. Attack surfaces that once required teams of experienced attackers can now be probed at machine speed by opponents with access to foundation models.

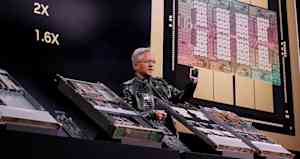

Comparing the performance of Blackwell vs. Rubin

| Specification | Blackwell GB300 NVL72 | Rubin NVL72 |
|---|---|---|
| Rack inference compute (FP4) | 1.44 exaFLOPS | 3.6 exaFLOPS |
| NVFP4 inference per GPU | 20 PFLOPS | 50 PFLOPS |
| NVLink bandwidth per GPU | 1.8 TB/s | 3.6 TB/s |
| Rack NVLink bandwidth | 130 TB/s | 260 TB/s |
| HBM bandwidth per GPU | ~8 TB/s | ~22 TB/s |
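The rack-level figures in the comparison follow from the per-GPU figures multiplied across 72 GPUs, and the generational gains are simple ratios. The sketch below checks both using the table’s own numbers; the function names are illustrative, and the HBM values are approximate, as the table notes.

```python
GPUS_PER_RACK = 72

# (Blackwell GB300 NVL72, Rubin NVL72) per-GPU figures from the comparison table.
PER_GPU = {
    "NVFP4 inference (PFLOPS)": (20.0, 50.0),
    "NVLink bandwidth (TB/s)": (1.8, 3.6),
    "HBM bandwidth, approx. (TB/s)": (8.0, 22.0),
}


def rack_total(per_gpu: float, gpus: int = GPUS_PER_RACK) -> float:
    """Aggregate a per-GPU figure across every GPU in the rack."""
    return per_gpu * gpus


def generational_gain(blackwell: float, rubin: float) -> float:
    """How many times larger the Rubin figure is than the Blackwell figure."""
    return rubin / blackwell


if __name__ == "__main__":
    for metric, (bw, rb) in PER_GPU.items():
        print(f"{metric}: {generational_gain(bw, rb):.2f}x")
    # 50 PFLOPS x 72 GPUs = 3,600 PFLOPS = 3.6 exaFLOPS; 20 x 72 = 1.44 exaFLOPS
    print(rack_total(50.0) / 1000, rack_total(20.0) / 1000)
    # 3.6 TB/s x 72 = 259.2 TB/s, which the table rounds to 260 TB/s
    print(round(rack_total(3.6), 1))
```

Run as-is, it reports gains of 2.50x for per-GPU compute, 2.00x for NVLink, and about 2.75x for HBM bandwidth.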

Industry momentum and AMD’s alternative

Nvidia isn’t operating in isolation. Research from the Confidential Computing Consortium and IDC, released in December, found that 75% of organizations are adopting confidential computing, with 18% already in production and 57% piloting deployments.

“Confidential Computing has grown from a niche concept into a vital strategy for data security and trusted AI innovation,” said Nelly Porter, governing board chair of the Confidential Computing Consortium. Real barriers remain: attestation validation challenges affect 84% of respondents, and a skills gap hampers 75%.

AMD’s Helios rack takes a different approach. Built on Meta’s Open Rack Wide specification, announced at the OCP Global Summit in October 2025, it delivers approximately 2.9 exaFLOPS of FP4 compute with 31 TB of HBM4 and 1.4 PB/s of aggregate bandwidth. Where Nvidia designs confidential computing into every component, AMD prioritizes open standards through the Ultra Accelerator Link and Ultra Ethernet consortia.

Competition between Nvidia and AMD gives security leaders more choice than they would otherwise have. The key is weighing Nvidia’s integrated approach against AMD’s open-standards flexibility in light of their own infrastructure and threat models.

What security leaders are doing now

Hardware-level confidentiality doesn’t replace zero-trust principles; it gives them teeth. What Nvidia and AMD are building lets security leaders verify trust cryptographically rather than assume it contractually.

That’s a meaningful shift for anyone running sensitive workloads on shared infrastructure. And if the attestation claims hold up in production, this approach could let enterprises extend zero-trust enforcement across thousands of nodes without the policy sprawl and agent overhead that software-only implementations require.

Before deployment: Verify attestation to confirm environments haven’t been tampered with. Cryptographic proof of compliance should be a prerequisite for signing contracts, not an afterthought or, worse, a nice-to-have. If your cloud provider can’t demonstrate attestation capabilities, that’s a question worth raising in your next QBR.
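Attestation verification generally comes down to three checks: the report is fresh (it echoes a nonce the verifier just issued), authentic (its signature verifies), and matches known-good measurements. The sketch below walks through that flow under loudly stated assumptions. The report format and every function name are hypothetical, and an HMAC over a shared key stands in for the vendor-signed certificate chain that real CPU and GPU attestation relies on. It illustrates the logic, not any vendor’s or cloud provider’s API.

```python
import hashlib
import hmac
import secrets
from dataclasses import dataclass


@dataclass
class AttestationReport:
    """Hypothetical report shape; real reports are vendor-defined and signed by hardware keys."""
    nonce: bytes
    measurements: dict  # component name -> hex digest of its firmware/configuration
    signature: bytes


def sign_body(key: bytes, nonce: bytes, measurements: dict) -> bytes:
    # Stand-in only: an HMAC over the report body in place of an asymmetric vendor signature.
    body = nonce + repr(sorted(measurements.items())).encode()
    return hmac.new(key, body, hashlib.sha256).digest()


def verify_report(report: AttestationReport, key: bytes, expected_nonce: bytes, golden: dict) -> bool:
    # 1. Freshness: the report must echo the nonce we issued, which blocks replayed reports.
    if not hmac.compare_digest(report.nonce, expected_nonce):
        return False
    # 2. Authenticity: the signature must cover this exact nonce and measurement set.
    expected_sig = sign_body(key, report.nonce, report.measurements)
    if not hmac.compare_digest(report.signature, expected_sig):
        return False
    # 3. Integrity: every component must match its known-good ("golden") value.
    return report.measurements == golden


if __name__ == "__main__":
    key = secrets.token_bytes(32)  # stand-in for the hardware root of trust
    golden = {
        "gpu_firmware": hashlib.sha256(b"gpu-fw-1.0").hexdigest(),
        "cpu_firmware": hashlib.sha256(b"cpu-fw-1.0").hexdigest(),
    }
    nonce = secrets.token_bytes(16)
    clean = AttestationReport(nonce, dict(golden), sign_body(key, nonce, golden))
    print(verify_report(clean, key, nonce, golden))  # True

    patched = {**golden, "gpu_firmware": hashlib.sha256(b"gpu-fw-tampered").hexdigest()}
    tampered = AttestationReport(nonce, patched, sign_body(key, nonce, patched))
    print(verify_report(tampered, key, nonce, golden))  # False: measurement mismatch
```

Note that the tampered report carries a valid signature and is still rejected, because its firmware measurement doesn’t match the golden value. In a real deployment the verifier never holds the signing key; it checks the signature against the vendor’s public certificate chain, which is why the HMAC here is only a placeholder.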

During operation: Maintain separate enclaves for training and inference, and include security teams in the model pipeline from the start. IBM’s research showed 63% of breached organizations had no AI governance policy. Security can’t be bolted on after development; trying to do so invites mediocre security design and lengthy red-team cycles that catch flaws that should have been engineered out of the model or app early.
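One way to make the separate-enclaves rule enforceable is a pre-deployment check that rejects any placement where training and inference share an enclave. Here is a minimal sketch, assuming a hypothetical workload inventory in which each entry records its kind and the enclave it runs in. The `Workload` type and `mixed_enclaves` function are illustrative names, not part of any product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    kind: str        # "training" or "inference"
    enclave_id: str  # hypothetical identifier for the confidential-computing enclave


def mixed_enclaves(workloads: list[Workload]) -> list[str]:
    """Return the IDs of enclaves that host both training and inference workloads."""
    kinds_by_enclave: dict[str, set[str]] = {}
    for w in workloads:
        kinds_by_enclave.setdefault(w.enclave_id, set()).add(w.kind)
    return sorted(
        enclave for enclave, kinds in kinds_by_enclave.items()
        if {"training", "inference"} <= kinds
    )


if __name__ == "__main__":
    inventory = [
        Workload("pretrain-run", "training", "enclave-a"),
        Workload("chat-serving", "inference", "enclave-b"),
        Workload("finetune-job", "training", "enclave-b"),
    ]
    violations = mixed_enclaves(inventory)
    if violations:
        print("Blocked: mixed training/inference in", violations)  # ['enclave-b']
```

A check like this belongs in the deployment pipeline, so a violation fails the rollout before launch instead of surfacing later in an audit.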

Across the organization: Run joint exercises between security and data science teams to surface vulnerabilities before attackers find them. Shadow AI accounted for 20% of breaches and exposed customer PII and IP at higher rates than other breach types.

Bottom line

The GTG-1002 campaign demonstrated that adversaries can now automate large-scale intrusions with minimal human oversight. Nearly every organization that experienced an AI-related breach lacked proper access controls.

Nvidia’s Vera Rubin NVL72 transforms racks from potential liabilities into cryptographically attested assets by encrypting every bus. AMD’s Helios offers an open-standards alternative. Hardware confidentiality alone won’t stop a determined adversary, but combined with strong governance and realistic threat exercises, rack-scale encryption gives security leaders the foundation they need to protect investments measured in hundreds of millions of dollars.

The question facing CISOs isn’t whether attested infrastructure is worth it. It’s whether organizations building high-value AI models can afford to operate without it.